IEEE SaTML 2025 Conference Contribution

Dr. Thorsten Eisenhofer will present the paper “Verifiable and Provably Secure Machine Unlearning” at SaTML 2025. Eisenhofer is a postdoc in the research group “Machine Learning and Security”. His paper introduces a new framework, supported by cryptographic proofs, for verifying that user data has been correctly deleted from machine learning models.

Researcher Spotlight: Lorenz Vaitl

Dr. Lorenz Vaitl defended his PhD in machine learning, focusing on optimizing the simulation of quantum fields. His doctoral thesis, “Path Gradient Estimators for Normalizing Flows,” explores how machine learning techniques can accelerate data-intensive simulations, which are crucial for understanding fundamental physical phenomena.

New BIFOLD professorship at Charité

Welcome to Grégoire Montavon, who is taking up a BIFOLD-Charité professorship on April 1, 2025. The professorship is one of several planned BIFOLD professorships at Charité – Universitätsmedizin Berlin, the institutional partner of BIFOLD.

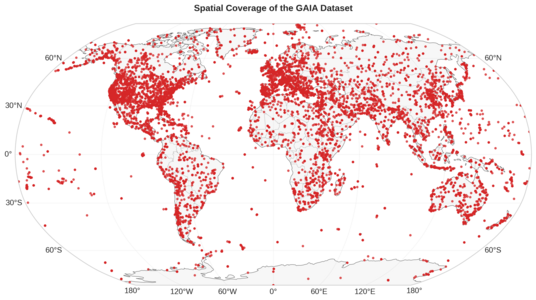

Interpreting Earth with GAIA

Angelos Zavras, a PhD candidate at the Harokopio University of Athens, used his short-term research grant from the DAAD to spend time in Berlin with the Remote Sensing research group at BIFOLD. Together with Prof. Begüm Demir and her team, he developed GAIA.

Mascara: Protecting Sensitive Data

Researchers from Volker Markl’s Database Systems and Information Management group introduce “Mascara”, a novel access control middleware designed to manage partial data access while protecting privacy through anonymization. The corresponding paper “Disclosure-Compliant Query Answering” was accepted for SIGMOD 2025.

EDBT/ICDT 2025 Conference Contributions

Four BIFOLD research groups will take part in the EDBT/ICDT 2025 data management conference. They will present seven publications, including one that received the EDBT 2025 Best Paper Award. The conference is scheduled for 25–28 March 2025 in Barcelona, Spain.

The Hidden Domino Effect

A team of BIFOLD researchers discovered that foundation models such as GPT, Llama, and CLIP, which are trained with unsupervised learning methods, often produce compromised representations: instances can be predicted correctly from them, but only on the basis of data artefacts. In a Nature Machine Intelligence publication, the researchers propose an explainable AI technique that can detect these false prediction strategies.

Data Systems Retreat

For the first time, researchers from four data systems groups at BIFOLD and TU Berlin came together for a joint offsite retreat in Wandlitz. This unique gathering fostered collaboration, networking, and idea exchange among experts, marking a milestone in interdisciplinary research.

Multiple Awards for BIFOLD Researchers

BTW 2025 proved very successful for BIFOLD researchers: not only did one of our alumni win the Best Dissertation Award, but two additional BIFOLD groups were also honored.

Insight into Deep Generative Models with Explainable AI

The recently completed agility project “Understanding Deep Generative Models” focused on making ML models more transparent in order to uncover hidden flaws in them, the goal of a research area known as Explainable AI.