A Global, Multi-modal, Multi-scale Vision-Language Dataset for Remote Sensing Image Analysis

Angelos is currently enrolled as a PhD candidate at the Harokopio University of Athens. A short-term research grant from the DAAD enabled him to spend time in Berlin as part of the Remote Sensing research group at BIFOLD. He is also affiliated with the OrionLab research group, which is associated with the Remote Sensing Lab of the National Technical University of Athens (NTUA) and the National Observatory of Athens (NOA). In the past he was involved for several years in the European Space Agency’s (ESA) Copernicus programme, initially as a DevOps Engineer and later as the Lead Copernicus Sentinels Data Access Operations Engineer of the Greek node of European Space Agency Hubs.

How did this collaboration come about?

Angelos Zavras: “My research group OrionLab, led by Professor Ioannis Papoutsis, and Professor Demir's group (RSiM) had already interacted in various scientific contexts, which led to the idea of me applying for the DAAD short-term research grant. I joined BIFOLD and RSiM in Berlin from September 2024 until February 2025. During that time, together with my research group in Athens (OrionLab) and the team in Berlin, we developed GAIA.”

Begüm Demir: "It was not only a pleasure to welcome Angelos to our group, it was also a scientifically very valuable cooperation."

What led you to the development of the GAIA dataset?

Angelos Zavras: "Earth-orbiting satellites generate an ever-growing amount of remote sensing (RS) data. Natural language offers an intuitive way to access, query, and interpret this data. However, existing Vision-Language Models (VLMs), trained mostly on noisy web data, struggle with the specialized RS domain. They often lack detailed, scientifically accurate descriptions, focusing instead on basic attributes like date and location. This limits their applicability to crucial RS downstream tasks."

That's where GAIA comes in, right? Professor Demir, can you describe GAIA and how it addresses this challenge?

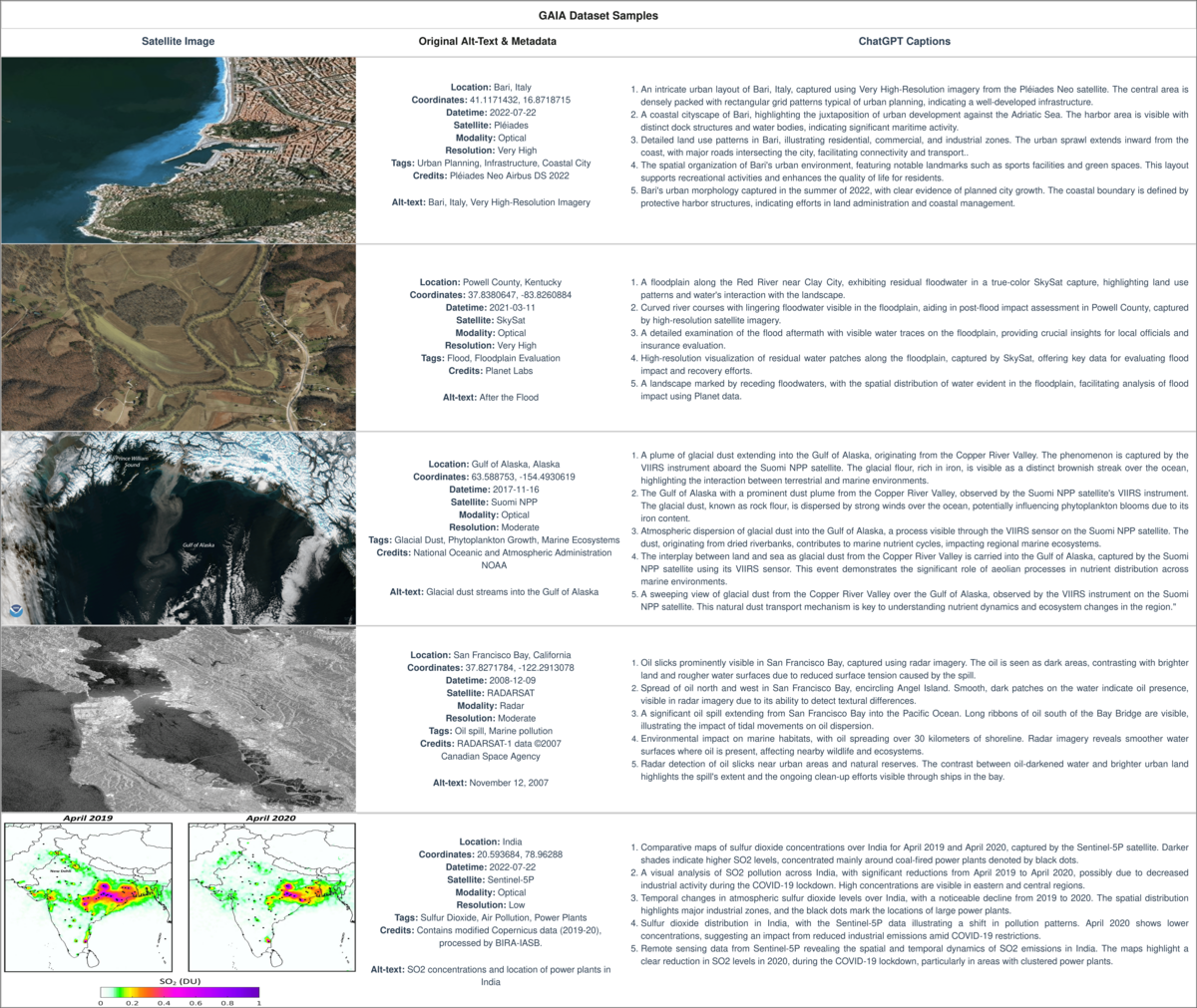

Begüm Demir: "GAIA is a novel dataset designed specifically for multi-scale, multi-sensor, and multi-modal RS image analysis. It's a collection of 205,150 carefully curated RS image-text pairs, representing a diverse range of RS modalities and spatial resolutions. Unlike existing datasets, GAIA focuses on capturing information about environmental changes, natural disasters, and other dynamic phenomena. It's globally and temporally balanced, covering the last 25 years."

Angelos, can you walk us through the process of creating GAIA?

Angelos Zavras: "GAIA's construction was a two-stage process. First, we performed targeted web-scraping of images and text from reputable RS-related sources, like NASA and ESA websites. Then, and this is key, we generated 5 high-quality, scientifically grounded synthetic captions for each RS image, leveraging the advanced vision-language capabilities of GPT-4o. We've also released the automated processing framework we developed, so that the wider research community can generate their own captions from web-crawled RS data."
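
For readers who want a concrete picture of the two-stage process described above, here is a minimal Python sketch. It is not the released GAIA framework. The names `ScrapedRecord`, `generate_captions`, `build_pairs`, and `stub_caption_model` are hypothetical, and the stub stands in for a real vision-language model call such as GPT-4o. The only detail taken from the interview is that each image receives five synthetic captions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# From the interview: five synthetic captions are generated per image.
CAPTIONS_PER_IMAGE = 5


@dataclass
class ScrapedRecord:
    """Stage 1 output (hypothetical shape): one scraped image with its web text."""
    image_url: str
    source_text: str
    captions: List[str] = field(default_factory=list)


# A caption model takes (image_url, source_text, variant_index) and returns text.
CaptionModel = Callable[[str, str, int], str]


def generate_captions(record: ScrapedRecord, model: CaptionModel,
                      n: int = CAPTIONS_PER_IMAGE) -> List[str]:
    """Stage 2: ask the model for n captions grounded in the scraped text."""
    return [model(record.image_url, record.source_text, i) for i in range(n)]


def build_pairs(records: List[ScrapedRecord],
                model: CaptionModel) -> List[Tuple[str, str]]:
    """Attach captions to each record and flatten into (image, caption) pairs."""
    pairs: List[Tuple[str, str]] = []
    for record in records:
        record.captions = generate_captions(record, model)
        pairs.extend((record.image_url, caption) for caption in record.captions)
    return pairs


def stub_caption_model(image_url: str, source_text: str, variant: int) -> str:
    """Placeholder for a real VLM call; it only echoes the scraped text."""
    return f"[variant {variant + 1}] {source_text.strip()}"


# Made-up example record; the URL and text are illustrative only.
records = [ScrapedRecord("https://example.org/flood.png",
                         "Flooding along a river delta.")]
pairs = build_pairs(records, stub_caption_model)
```

Swapping `stub_caption_model` for a function that calls an actual vision-language model would leave the rest of the sketch unchanged.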

Professor Demir, what are the results of your experiments with GAIA? What makes GAIA a game changer?

Begüm Demir: "GAIA is a crucial resource for advancing the field, offering a unique combination of scale, diversity, and scientific accuracy that was previously lacking. Our extensive experiments demonstrate that GAIA significantly improves performance on RS image classification, cross-modal retrieval, and image captioning tasks. The synthetic yet scientifically grounded captions have proven particularly valuable, providing a much richer semantic context compared to the web-crawled alt-text captions."

The joint research is currently submitted for publication.

Paper: https://arxiv.org/abs/2502.09598

GitHub: https://github.com/Orion-AI-Lab/GAIA

HuggingFace: https://huggingface.co/datasets/azavras/GAIA