Making ML models more transparent

Models such as ChatGPT, DALL-E and DeepSeek show that deep generative models are a crucial development in machine learning. While machine learning (ML) has traditionally focused on well-specified tasks such as classification, regression, or ranking, generative models can produce far more diverse outputs, such as synthesized images, new molecules, or textual responses to a user prompt.

In practice, it is crucial to make ML models more transparent, for example to uncover hidden flaws in them. This is the goal of a research area known as Explainable AI. As part of the recently completed agility project “Understanding Deep Generative Models”, BIFOLD researchers Prof. Dr. Grégoire Montavon, Prof. Dr. Wojciech Samek and Prof. Dr. Klaus-Robert Müller focused precisely on this topic. They concentrated on the explainability of two aspects of generative models: the output of the model (i.e., why a particular instance was generated in a specific way) and the model's internal representation (which serves as an intermediate step in the generation process).

“We researched how to extend Explainable AI techniques to two major generative model architectures: Transformers and Mamba. We contributed a new approach that takes layer-wise relevance propagation (LRP), a well-known Explainable AI technique, and rethinks its propagation mechanism to handle the peculiar nonlinearities of Transformers and Mamba”, explains Grégoire Montavon.
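
To make the idea of adapting the propagation to a nonlinearity more concrete, here is a minimal sketch in plain Python. It assumes a hypothetical toy model f(x) = Σ_j SiLU(Σ_i W_ji x_i) without bias terms (SiLU, z·sigmoid(z), is the gated activation used inside Mamba blocks) and scores input features with Gradient×Input. All function names are illustrative. Treating the sigmoid gate as a constant during the backward pass, an idea similar in spirit to the rules described in the papers listed below, makes the input scores sum exactly to the output (relevance conservation), whereas the plain gradient does not. This is not the authors' implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def pre_activations(W, x):
    # z_j = sum_i W[j][i] * x[i]  (no bias, so the toy model is exactly decomposable)
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def model(W, x):
    # Hypothetical toy model: f(x) = sum_j SiLU(z_j), with SiLU(z) = z * sigmoid(z)
    return sum(z * sigmoid(z) for z in pre_activations(W, x))

def naive_grad_x_input(W, x):
    # R_i = x_i * df/dx_i using the full SiLU derivative s + z * s * (1 - s)
    z = pre_activations(W, x)
    dsilu = [sigmoid(zj) + zj * sigmoid(zj) * (1 - sigmoid(zj)) for zj in z]
    return [x[i] * sum(W[j][i] * dsilu[j] for j in range(len(W))) for i in range(len(x))]

def detached_grad_x_input(W, x):
    # Same, but the gate sigmoid(z_j) is treated as a constant, so SiLU acts
    # as a fixed linear scaling z_j -> z_j * sigmoid(z_j) in the backward pass
    z = pre_activations(W, x)
    gate = [sigmoid(zj) for zj in z]
    return [x[i] * sum(W[j][i] * gate[j] for j in range(len(W))) for i in range(len(x))]

W = [[1.0, -2.0], [0.5, 1.5]]
x = [1.0, 2.0]
f = model(W, x)
print(f, sum(naive_grad_x_input(W, x)), sum(detached_grad_x_input(W, x)))
```

Because the detached version makes the model locally linear in x, its scores add up to f(x) up to floating-point rounding, while the naive scores overshoot or undershoot depending on the curvature of SiLU.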

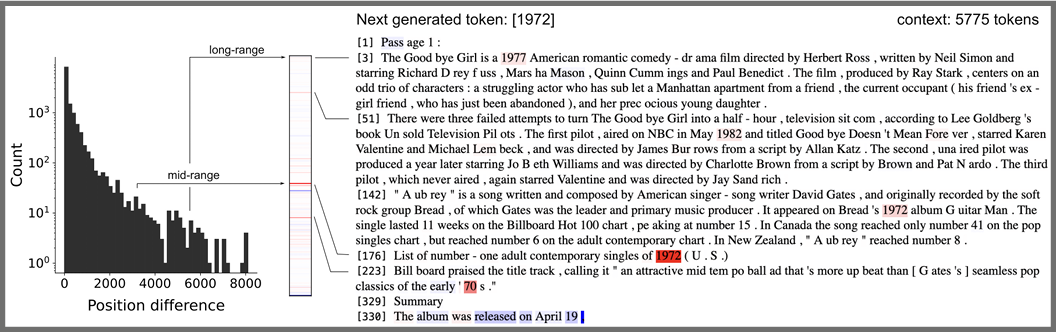

With this new method, BIFOLD researchers were able to answer a key question about Mamba generative models, namely whether they actually exploit their long-range capabilities on real data. Their analysis gave a positive answer: the text Mamba generates is sometimes based on information that appears several thousand words earlier. The Figure above shows the output of the LRP analysis, highlighting how Mamba uses information that appears much earlier in the text (such as references to specific years) to generate the next words in the sentence.
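
One simple way to summarize such a token-level explanation is to ask what share of the total relevance falls on tokens far back in the context. The sketch below assumes one relevance score per context token (for example from an LRP pass), ordered from oldest to newest, with the predicted token directly after the last one. The function name and the toy scores are hypothetical and are not results from the study.

```python
def long_range_share(relevance, min_distance):
    """Fraction of total absolute relevance carried by context tokens that lie
    at least `min_distance` positions before the predicted token.

    relevance[t] is the score of context token t (oldest first); the last
    context token has distance 1 to the predicted token.
    """
    n = len(relevance)
    total = sum(abs(r) for r in relevance)
    if total == 0:
        return 0.0
    far = sum(abs(r) for t, r in enumerate(relevance) if n - t >= min_distance)
    return far / total

# Toy scores for illustration only: half the relevance sits on the oldest token
print(long_range_share([0.5, 0.0, 0.0, 0.1, 0.4], min_distance=4))
```

Using absolute values counts both supporting and contradicting evidence as "used" information; summing signed scores instead would be a different, equally reasonable design choice.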

Deep generative models, and deep learning in general, rely on abstract intermediate representations. The better the representation, the better the model. To shed light on these representations, BIFOLD researchers developed BiLRP, an extension of LRP that explains why two data points look similar or dissimilar in representation space. Rather than only highlighting relevant features, as classical explanation methods do, BiLRP reveals how features interact to produce a high similarity score. Using BiLRP, the researchers found that similarity measurements performed on the representations of popular pretrained neural networks such as VGG-16 are occasionally "right for the wrong reasons". For example, rotated copies of the same image are correctly judged similar, but only because of a small circle at the center of the image that remains unchanged under rotation, as shown in the Figure (left). In a follow-up work, BiLRP was applied to the newer and larger CLIP image model, a possible starting point for various generative tasks such as image-based text generation. Here again, it uncovered unexpected strategies based on matching text artifacts in the input images.
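
BiLRP's output is a matrix of pairwise feature interactions rather than a single heatmap. The sketch below shows that structure for the simplest case we can assume: a hypothetical linear embedding φ(x) = Wx and a dot-product similarity y = ⟨φ(x), φ(x′)⟩. For each embedding dimension k it takes the outer product of the first-order explanations of φ_k(x) and φ_k(x′) and sums over k; for this linear model the interaction scores add up exactly to y. The actual BiLRP method propagates through all layers of a deep network (see Eberle et al. in the list below); the function names here are illustrative only.

```python
def embed(W, x):
    # Toy linear embedding phi(x) = W x (one row of W per embedding dimension)
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def similarity(W, x, xp):
    # Dot-product similarity y = <phi(x), phi(x')>
    return sum(a * b for a, b in zip(embed(W, x), embed(W, xp)))

def lrp_linear(w_row, x):
    # First-order explanation of phi_k(x) = sum_i w_ki * x_i: feature i gets w_ki * x_i
    return [w * xi for w, xi in zip(w_row, x)]

def bilrp(W, x, xp):
    # R[i][j] = sum_k R_k(x)[i] * R_k(x')[j]: contribution of feature i of x
    # interacting with feature j of x' to the similarity score
    R = [[0.0] * len(xp) for _ in x]
    for w_row in W:
        r, rp = lrp_linear(w_row, x), lrp_linear(w_row, xp)
        for i, ri in enumerate(r):
            for j, rpj in enumerate(rp):
                R[i][j] += ri * rpj
    return R

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
x, xp = [1.0, 2.0, 0.5], [0.5, 1.0, 1.0]
R = bilrp(W, x, xp)
print(similarity(W, x, xp), sum(map(sum, R)))
```

In the rotated-image example, a large entry linking the central pixels of the two images would be the kind of signal that exposes a "right for the wrong reasons" match.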

“Our work has laid a foundation for understanding key components of modern generative AI systems. Our method is formulated at an abstract level, allowing future potential applications to other data or generative models, whether they produce text, images, or something else. Our research also paves the way for building more robust, reliable and transparent AI systems”, summarizes Grégoire Montavon.

Publications

- F Rezaei Jafari, G Montavon, KR Müller, O Eberle. MambaLRP: Explaining Selective State Space Sequence Models. Advances in Neural Information Processing Systems (NeurIPS), 2024

- R Achtibat, S Hatefi, M Dreyer, A Jain, T Wiegand, S Lapuschkin, W Samek. AttnLRP: Attention-Aware Layer-Wise Relevance Propagation for Transformers. International Conference on Machine Learning (ICML), 2024

- A Ali, T Schnake, O Eberle, G Montavon, KR Müller, L Wolf. XAI for Transformers: Better Explanations through Conservative Propagation. International Conference on Machine Learning (ICML), 2022

- O Eberle, J Büttner, F Kräutli, KR Müller, M Valleriani, G Montavon. Building and Interpreting Deep Similarity Models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(3):1149-1161, 2022

- J Kauffmann, J Dippel, L Ruff, W Samek, KR Müller, G Montavon. The Clever Hans Effect in Unsupervised Learning. arXiv:2408.08041, 2024