Dr. Thorsten Eisenhofer presents secure data deletion for AI models

Can machines forget – and prove it?

BIFOLD researcher Dr. Thorsten Eisenhofer will contribute a research paper to the 3rd IEEE Conference on Secure and Trustworthy Machine Learning (IEEE SaTML 2025), held from April 9 to 11, 2025, in Copenhagen. The conference advances the theoretical and practical understanding of vulnerabilities inherent to machine learning, explores the robustness of learning algorithms and systems, and helps build a unified, coherent scientific community working toward trustworthy machine learning.

Thorsten Eisenhofer is a postdoctoral researcher at BIFOLD, in the Machine Learning and Security group chaired by Konrad Rieck. He investigates machine learning and computer security from a systems security perspective.

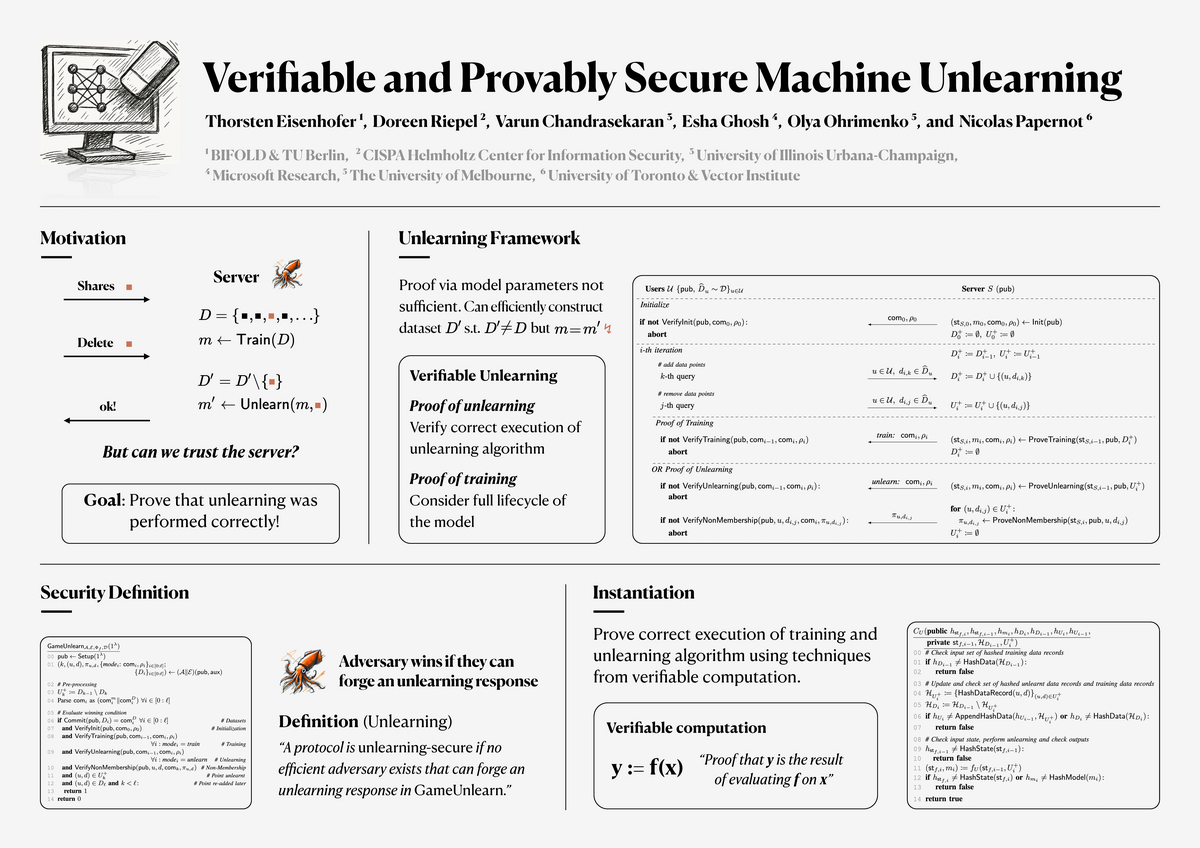

The paper proposes a framework for verifiable machine unlearning, where users can request the deletion of their data from machine learning models and receive cryptographic proofs that the data was removed. The framework aims to provide formal guarantees of data deletion and prevent dishonest service providers from falsifying unlearning processes.
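To make the idea of a checkable deletion claim more concrete, the following is a deliberately simplified toy sketch. It is not the construction from the paper: it assumes a hypothetical setup in which the service provider publishes a hash commitment to its training set together with the hashed records, and a user checks that their own record is no longer among them. The function names (`commit`, `verify_deletion`) and the sample records are illustrative assumptions. A real scheme would additionally have to prove that the model was actually trained on the committed data, which this sketch does not attempt.

```python
import hashlib


def h(data: bytes) -> str:
    """SHA-256 digest as a hex string."""
    return hashlib.sha256(data).hexdigest()


def commit(records):
    """Toy commitment (assumption, not the paper's scheme):
    hash each record, sort the digests, then hash their concatenation."""
    leaves = sorted(h(r) for r in records)
    return h("".join(leaves).encode()), leaves


def verify_deletion(record: bytes, commitment: str, leaves) -> bool:
    """User-side check: the published digests must match the commitment,
    and the user's own record digest must be absent from them."""
    if h("".join(leaves).encode()) != commitment:
        return False  # provider's digest list does not match its commitment
    return h(record) not in leaves


# Hypothetical example data
dataset = [b"alice:record", b"bob:record", b"carol:record"]

# Provider removes Bob's record and publishes a fresh commitment
remaining = [r for r in dataset if r != b"bob:record"]
commitment_after, leaves_after = commit(remaining)

bob_deleted = verify_deletion(b"bob:record", commitment_after, leaves_after)
alice_deleted = verify_deletion(b"alice:record", commitment_after, leaves_after)
```

In this toy run, `bob_deleted` evaluates to true and `alice_deleted` to false. The check only binds the provider to a dataset; it says nothing about which data the deployed model was computed from, which is the gap that formal guarantees against dishonest providers are meant to close.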
