Prof. Dr. Konrad Rieck

Research Group Lead

Konrad Rieck is a professor at TU Berlin, where he leads the Chair of Machine Learning and Security as part of the Berlin Institute for the Foundations of Learning and Data (BIFOLD). Previously, he held academic positions at TU Braunschweig, the University of Göttingen, and the Fraunhofer Institute FIRST. His research focuses on the intersection of computer security and machine learning. He has published over 100 papers in this area and serves on the program committees of the top security conferences (the so-called "system security circus").

Recent Projects

- ERC Consolidator Grant: "MALFOY: Machine Learning for Offensive Computer Security"

- Excellence Cluster CASA: "TELLY: Testing the Limits of Machine Learning in Vulnerability Discovery"

- Excellence Cluster CASA: "PACO: Analysis and Discovery of Parser-Confusion Vulnerabilities"

- DFG Project: "ALISON: Attacks against Machine Learning in Structured Domains"

Awards

- USENIX Security Distinguished Paper Award 2022

- ERC Consolidator Grant 2021

- AISEC Best Paper Award 2021

- Winner of Microsoft MLSEC competition 2020

- LehrLeo: Best Lecture at TU Braunschweig 2019

- LehrLeo: Best Lab Course at TU Braunschweig 2019

- German Prize for IT-Security 2016 (2nd Place)

- DIMVA Best Paper Award 2016

- Google Faculty Research Award 2014

- ACSAC Outstanding Paper Award 2012

- CAST/GI Dissertation Award IT-Security 2010

Research Interests

- Intelligent Security Systems

- Attack Detection and Prevention

- Malware and Vulnerability Analysis

- Adversarial Machine Learning

Memberships

- BMBF Plattform Lernende Systeme

- European Laboratory for Learning and Intelligent Systems (ELLIS)

- Gesellschaft für Informatik

- Forum InformatikerInnen für Frieden und gesellschaftliche Verantwortung

Thorsten Eisenhofer, Erwin Quiring, Jonas Möller, Doreen Riepel, Thorsten Holz, Konrad Rieck

No more Reviewer #2: Subverting Automatic Paper-Reviewer Assignment using Adversarial Learning

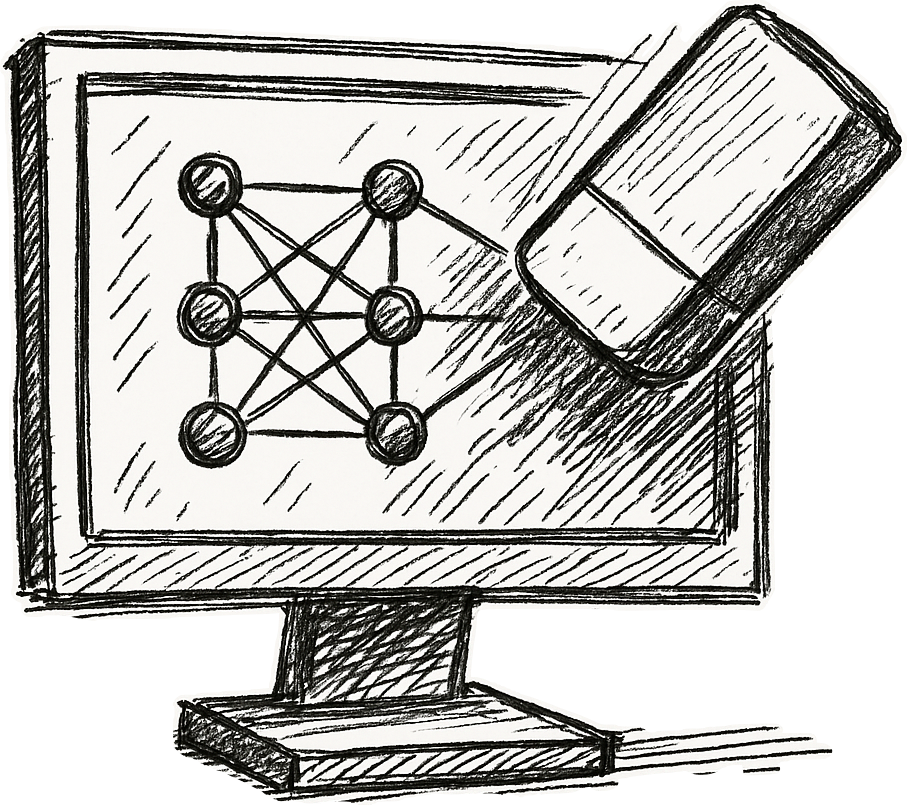

Alexander Warnecke, Lukas Pirch, Christian Wressnegger, Konrad Rieck

Machine Unlearning of Features and Labels

Daniel Arp, Erwin Quiring, Feargus Pendlebury, Alexander Warnecke, Fabio Pierazzi, Christian Wressnegger, Lorenzo Cavallaro, Konrad Rieck

Dos and Don’ts of Machine Learning in Computer Security

Alexander Warnecke, Daniel Arp, Christian Wressnegger, Konrad Rieck

Evaluating Explanation Methods for Deep Learning in Security

Erwin Quiring, David Klein, Daniel Arp, Martin Johns, Konrad Rieck

Adversarial Preprocessing: Understanding and Preventing Image-Scaling Attacks in Machine Learning

Test-of-Time Award for Konrad Rieck

Congratulations to BIFOLD Research Group Lead Konrad Rieck and his former colleagues. The Annual Computer Security Applications Conference (ACSAC) awarded the scientists the Test-of-Time Award for their publication "CUJO: Efficient Detection and Prevention of Drive-by-Download Attacks" (2010).

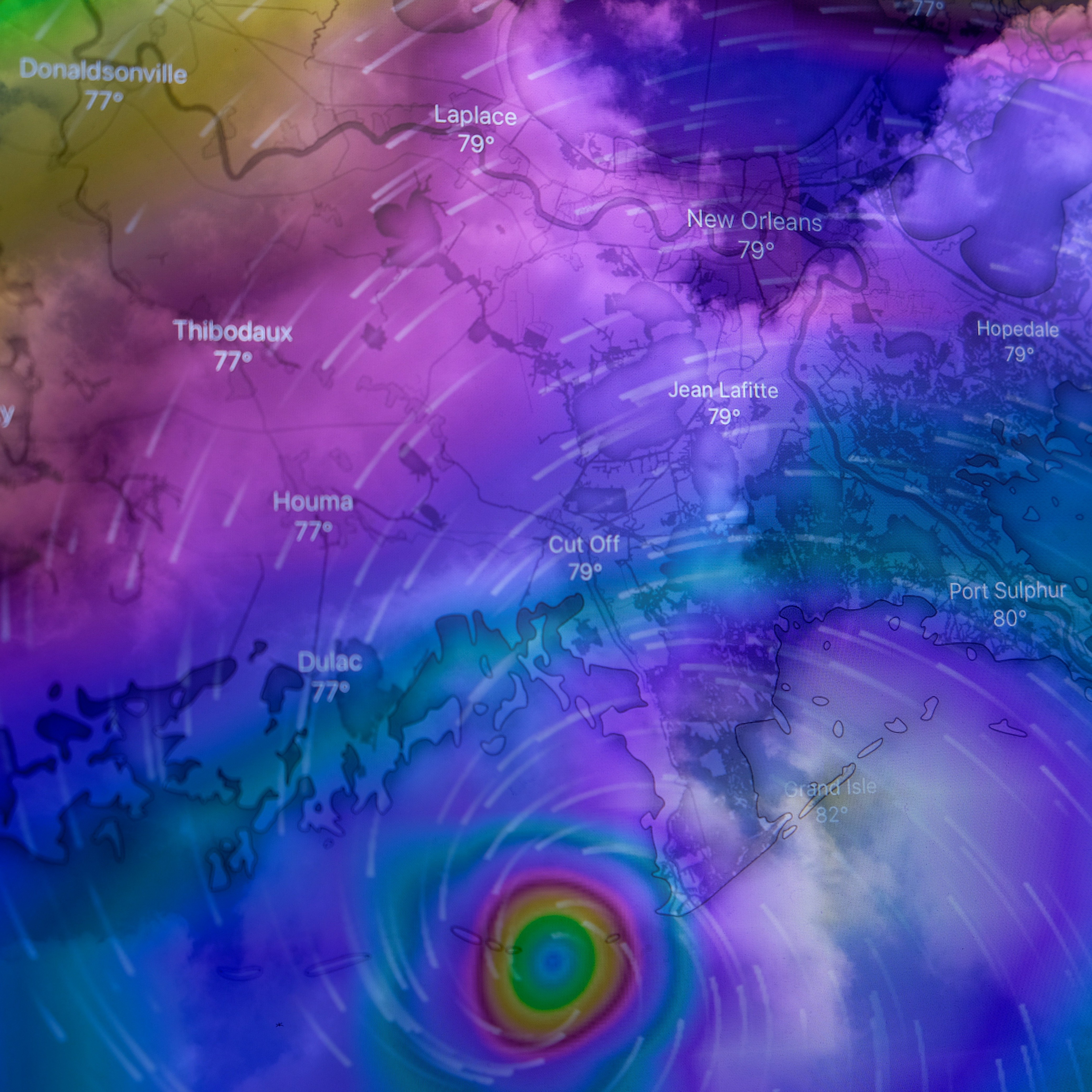

ACM CCS 2025: Distinguished Paper Award

Congratulations to BIFOLD researchers Erik Imgrund, Thorsten Eisenhofer, and Konrad Rieck from the MLSec group, whose paper “Exposing Security Risks in AI Weather Forecasting” received a Distinguished Paper Award at the ACM Conference on Computer and Communications Security (CCS) 2025.

Attacking privacy leaks in virtual backgrounds

Peeking through the virtual curtain: A new study by the BIFOLD MLSec group reveals that current virtual backgrounds in video calls can leak enough pixels from the environment to reconstruct objects in the background.

USENIX 2025 Conference Contributions

BIFOLD researchers from the MLSec group will present two papers at the 34th USENIX Security Symposium (Aug 13–15, 2025, Seattle). One paper shows that virtual backgrounds in video calls can unintentionally reveal parts of a user’s real surroundings, exposing them to privacy risks.

Even the smallest number can make a big difference

Minor numerical deviations in backend libraries such as CUDA or MKL can cause identical AI models to produce different outputs. At ICML 2025, BIFOLD researcher Konrad Rieck showed how such subtle imprecisions can be exploited, posing a significant risk to the security of AI systems.

IEEE SaTML 2025 Conference Contribution

Dr. Thorsten Eisenhofer will present the paper “Verifiable and Provably Secure Machine Unlearning” at SaTML 2025. Eisenhofer is a postdoc in the research group “Machine Learning and Security”. His paper introduces a new framework, supported by cryptographic proofs, for verifying that user data has been correctly deleted from machine learning models.

Machine Learning Backdoors in Hardware

So-called backdoor attacks pose a serious threat to machine learning, as they can compromise the integrity of security-critical AI systems, such as those used in autonomous driving or healthcare.

Learning from the Best

Dr. Anne Josiane Kouam is researching mobile security and privacy at BIFOLD. She has been selected as one of 200 promising young mathematicians and computer scientists to spend a week with leading experts in the field.

BIFOLD researchers present four papers at ASIACCS 2024

The 19th ACM ASIA Conference on Computer and Communications Security (ASIACCS 2024) will take place in Singapore from July 1 to July 5, 2024. The conference focuses on information security and privacy.

IEEE Test-of-Time Award for Konrad Rieck

Together with his former PhD student Fabian Yamaguchi and the entire team, Konrad Rieck won the Test-of-Time Award at the IEEE Symposium on Security and Privacy for their paper "Modeling and Discovering Vulnerabilities with Code Property Graphs". The IEEE Symposium on Security and Privacy is the oldest and most important conference in computer security. Each year, the Test-of-Time Award is given to papers that have significantly influenced research and can be described as groundbreaking.

Researcher Spotlight: Dr. Alexander Warnecke

Congratulations to Dr. Alexander Warnecke, who successfully defended his PhD thesis "Security Viewpoints on Explainable Machine Learning" on April 16, 2024.

Project Launch AIgenCY

With the innovative research project "AIgenCY - Opportunities and Risks of Generative AI in Cybersecurity," leading experts from academia and industry take on the challenge of exploring the implications of generative artificial intelligence (AI) for cybersecurity.

Email security under scrutiny: Examining SPF weaknesses

Cybersecurity researchers at BIFOLD found weaknesses in Sender Policy Framework (SPF) records, which protect email users from forged senders. The paper “Lazy Gatekeepers: A Large-Scale Study on SPF Configuration in the Wild” analyzes the SPF records of 12 million domains and was presented at the 2023 ACM Internet Measurement Conference.

Adversarial Papers: New attack fools AI-supported text analysis

Security researchers have found significant vulnerabilities in learning algorithms used for text analysis: With the help of a novel attack, they were able to show that topic recognition algorithms can be fooled by even small changes in words, sentences and references.

BIFOLD welcomes Israeli delegation

Among other institutions, BIFOLD hosted a delegation from Israeli universities as part of Germany's “Willkommen” Visitors Programme. Various BIFOLD researchers gave short introductions to their research foci in AI. The “Willkommen” programme invites opinion leaders to experience Germany and gain a nuanced understanding of the country.

Machine Learning and Security

Welcome to Prof. Dr. Konrad Rieck, who has headed the new research group Machine Learning and Security at BIFOLD and TU Berlin since January 1, 2023.

Cybersecurity under scrutiny

In cybersecurity research, machine learning (ML) has emerged as one of the most important tools for investigating security-related problems. However, a group of European researchers from TU Berlin, TU Braunschweig, University College London, King’s College London, Royal Holloway University of London, and Karlsruhe Institute of Technology (KIT)/KASTEL Security Research Labs, led by BIFOLD researchers from TU Berlin, has recently shown that ML-based research in cybersecurity is often prone to errors.

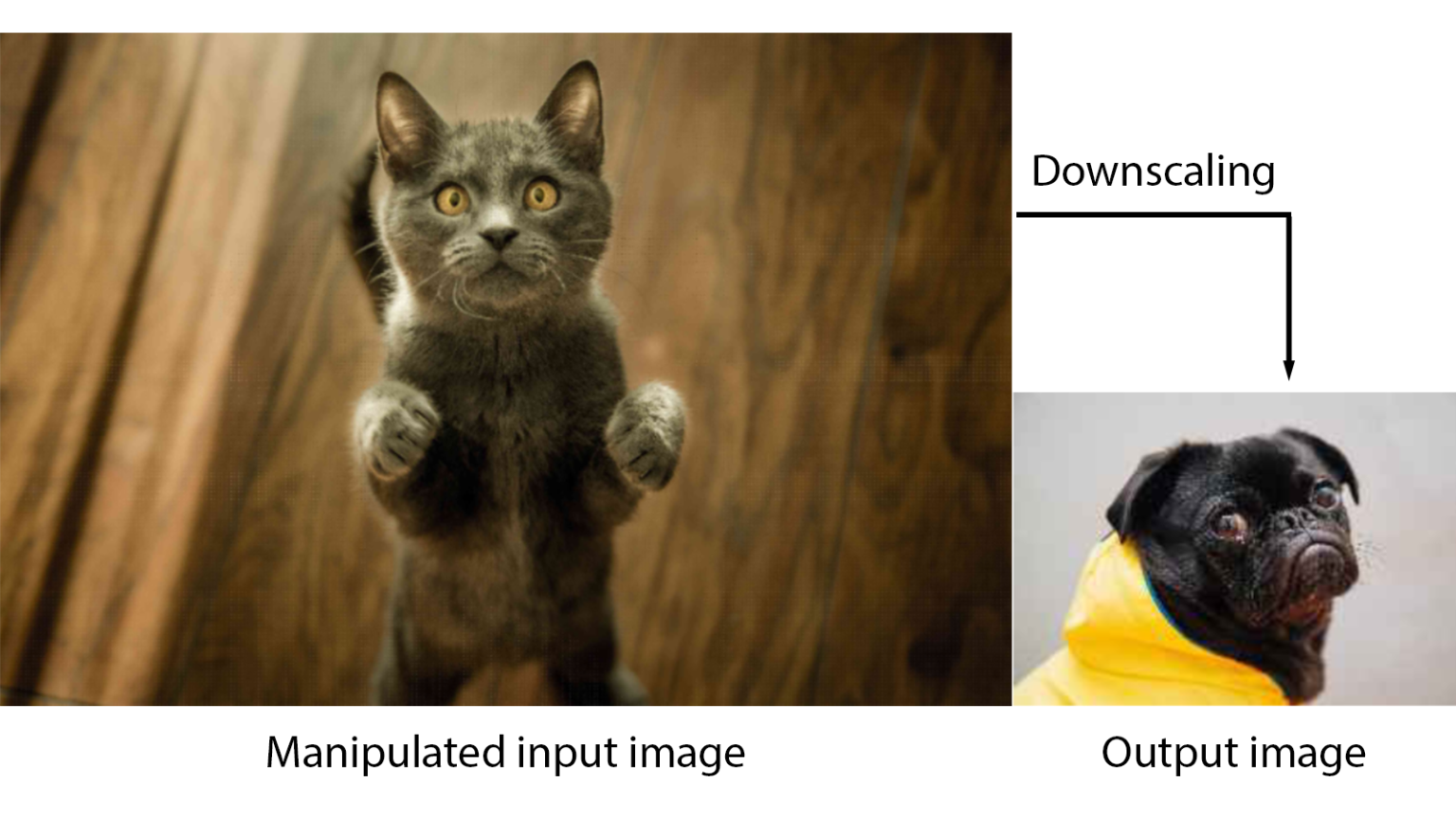

Preventing Image-Scaling Attacks on Machine Learning

BIFOLD Fellow Prof. Dr. Konrad Rieck, head of the Institute of System Security at TU Braunschweig, and his colleagues provide the first comprehensive analysis of image-scaling attacks on machine learning, including a root-cause analysis and effective defenses. Konrad Rieck and his team showed that attacks on the scaling algorithms used in machine learning (ML) preprocessing can manipulate images unnoticeably, so that their content changes after downscaling and yields unexpected, arbitrary outputs. The work was presented at the USENIX Security Symposium 2020.