The lack of transparency of deep neural networks, in combination with the widespread use of complex models in practice, limits the applicability of AI, particularly in safety-critical areas. In recent years, a number of novel explanation methods have been introduced that aim to explain the decision strategy behind the predictions of black-box models. Explanations are often presented as heatmaps that highlight the features of the input that contributed strongly to the prediction, e.g. in automatic image classification. In the paper “NoiseGrad – Enhancing Explanations by Introducing Stochasticity to Model Weights,” to be presented at the 36th AAAI Conference on Artificial Intelligence (AAAI-22), a team of researchers, among them BIFOLD researchers Dr. Marina Höhne, Shinichi Nakajima, PhD, and Kirill Bykov, proposes new methods that have been shown to make existing explanation methods more robust and reliable by reducing the visual diffusion of the explanations.

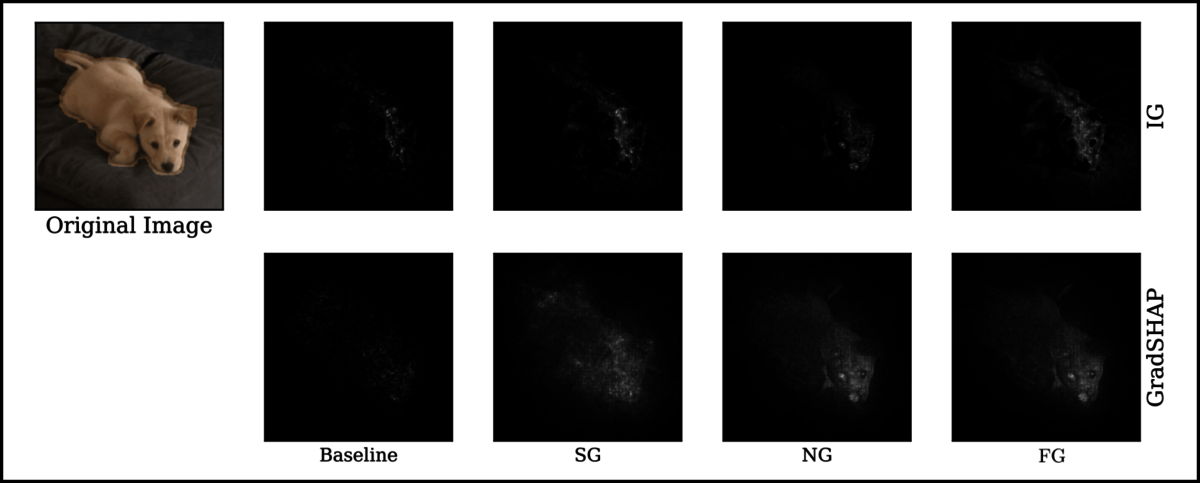

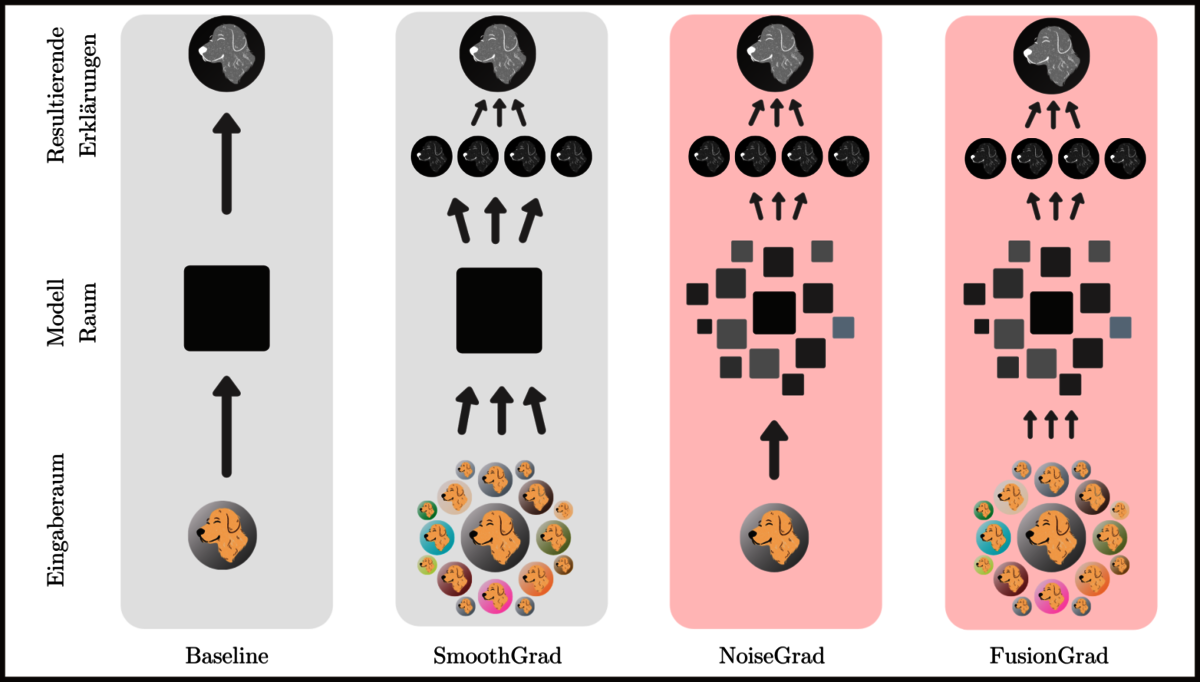

A number of methods have been developed in the past to improve the explanations produced for such models. One of them, SmoothGrad, applies stochasticity to the input: the original input image is modified by adding so-called “noise”. In machine learning, the term “noise” refers to variability deliberately introduced into the data points. In our example, individual image pixels are slightly altered, which creates many slightly different (noisy) copies of the same image. These noisy copies are then fed into the AI model, which provides a prediction for each copy, and each prediction is in turn converted into an explanation using the explanation method. Finally, the explanations of all noisy input images are averaged, so that in the end only those features are highlighted that are decision-relevant on average across all copies.
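The SmoothGrad procedure described above can be sketched in a few lines. This is a minimal illustration, not the original implementation: the toy model (a single tanh unit), the plain input gradient used as the explanation method, and the names `gradient_explanation`, `smoothgrad`, `n_samples` and `sigma` are all assumptions made for this example.

```python
import numpy as np

def gradient_explanation(x, w):
    """Input gradient of the toy model f(x) = tanh(x . w) (assumed explanation method)."""
    return (1.0 - np.tanh(x @ w) ** 2) * w

def smoothgrad(x, w, n_samples=50, sigma=0.1, seed=0):
    """Average the explanation over noisy copies of the input."""
    rng = np.random.default_rng(seed)
    explanations = []
    for _ in range(n_samples):
        # Slightly alter every "pixel" with additive Gaussian noise.
        x_noisy = x + rng.normal(0.0, sigma, size=x.shape)
        explanations.append(gradient_explanation(x_noisy, w))
    return np.mean(explanations, axis=0)

w = np.array([0.5, -1.0, 0.0, 2.0])  # hypothetical trained weights
x = np.array([0.2, 0.1, 0.9, 0.3])   # hypothetical flattened input image
attribution = smoothgrad(x, w)
print(attribution)
```

With `sigma=0.0` every noisy copy equals the original input, so the result reduces to the plain gradient explanation.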

The BIFOLD research team approached the problem from a different direction. “In the method we propose, NoiseGrad, we do not alter the input image but rather lay the noise over the explanation model. In the end, this means that we generate many, slightly different copies of the original models by adding noise to the individual parameters and thereby develop many, slightly shifted decision strategies. Then we analyze the original image with each of these slightly different noisy models and average them for the final explanation,” Bykov explains.
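The idea of perturbing the model instead of the input can be sketched as follows. This is a minimal illustration under stated assumptions: the toy tanh model, the input-gradient explanation, and the names `noisegrad`, `n_models` and `sigma` are hypothetical. The noise here is multiplicative Gaussian noise centered at 1, which is one possible choice; the text above only states that noise is added to the individual parameters.

```python
import numpy as np

def gradient_explanation(x, w):
    """Input gradient of the toy model f(x) = tanh(x . w) (assumed explanation method)."""
    return (1.0 - np.tanh(x @ w) ** 2) * w

def noisegrad(x, w, n_models=50, sigma=0.2, seed=0):
    """Average the explanation of the unchanged input over noisy copies of the model."""
    rng = np.random.default_rng(seed)
    explanations = []
    for _ in range(n_models):
        # Assumption: multiplicative noise, one factor per weight, centered at 1.
        w_noisy = w * rng.normal(1.0, sigma, size=w.shape)
        explanations.append(gradient_explanation(x, w_noisy))
    return np.mean(explanations, axis=0)

w = np.array([0.5, -1.0, 0.0, 2.0])  # hypothetical trained weights
x = np.array([0.2, 0.1, 0.9, 0.3])   # hypothetical flattened input image
attribution = noisegrad(x, w)
print(attribution)
```

Note that the input `x` is never modified; only the weights differ between the sampled models.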

The team was able to demonstrate that NoiseGrad significantly improves explanation methods in terms of their ability to localize objects of interest, their reliability and their robustness. In addition, a combination of the two approaches (FusionGrad), i.e. varying both the input image and the model parameters, could stabilize explanation methods even further. Anna Hedström, the other lead author of the paper and doctoral candidate at TU Berlin, explains: “What is interesting about our procedure is that it not only improves existing methods of explainable AI, but that our methods are designed to be applicable to a wide range of models, explanation methods and domains, regardless of whether the input is an image, text, or number. By using Quantus, a toolkit we recently developed to assess the quality of explanations, we were able to show that our methods, NoiseGrad and FusionGrad, boosted explainable AI methods in several tested evaluation criteria, which quantitatively underlines the advantages of our methods.”
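Combining both sources of noise can be sketched as a nested loop over noisy models and noisy inputs. As before, this is only an illustration: the toy tanh model, the input-gradient explanation, the multiplicative weight noise, and all names and default values (`fusiongrad`, `n_models`, `n_inputs`, `sigma_w`, `sigma_x`) are assumptions made for this example.

```python
import numpy as np

def gradient_explanation(x, w):
    """Input gradient of the toy model f(x) = tanh(x . w) (assumed explanation method)."""
    return (1.0 - np.tanh(x @ w) ** 2) * w

def fusiongrad(x, w, n_models=10, n_inputs=10, sigma_w=0.2, sigma_x=0.1, seed=0):
    """Average the explanation over noisy models AND noisy inputs."""
    rng = np.random.default_rng(seed)
    explanations = []
    for _ in range(n_models):
        # Perturb the model parameters (assumed multiplicative noise).
        w_noisy = w * rng.normal(1.0, sigma_w, size=w.shape)
        for _ in range(n_inputs):
            # Perturb the input (additive noise, as in SmoothGrad).
            x_noisy = x + rng.normal(0.0, sigma_x, size=x.shape)
            explanations.append(gradient_explanation(x_noisy, w_noisy))
    return np.mean(explanations, axis=0)

w = np.array([0.5, -1.0, 0.0, 2.0])  # hypothetical trained weights
x = np.array([0.2, 0.1, 0.9, 0.3])   # hypothetical flattened input image
attribution = fusiongrad(x, w)
print(attribution)
```

The cost grows with `n_models * n_inputs` explanation calls, so in practice both counts would likely be kept small.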

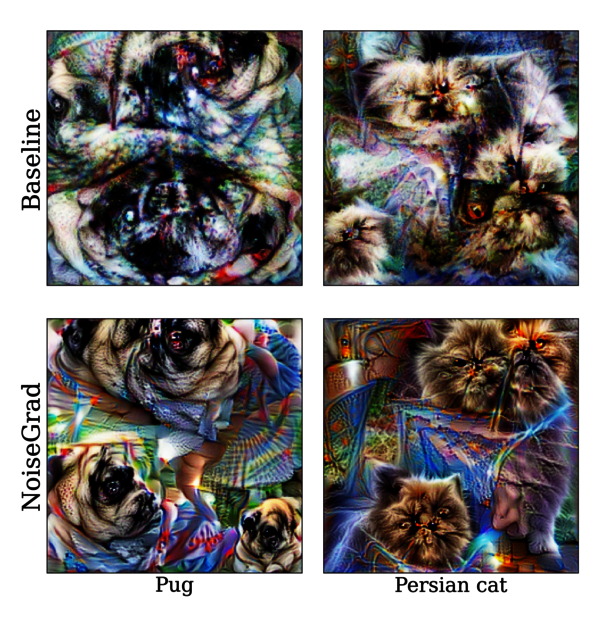

The scientists were also able to show that NoiseGrad enhances global explanation methods, such as Activation Maximization. Activation Maximization generates an artificial image by solving an optimization problem that maximizes the activation of a certain output neuron. The accompanying figure shows the maximization of the output neuron of the class Pug (first column) and Persian cat (second column). As a result, Activation Maximization visualizes the concept that the model has learned for the specific class in a form that humans can understand.
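The optimization behind Activation Maximization, and its extension to many noisy models at once, can be sketched with plain gradient ascent. This is a deliberately simplified illustration: the model is a hypothetical linear classifier (`logits = W @ x`) so that the gradient can be written by hand, an L2 penalty keeps the input bounded, and the names `activation_maximization`, `steps`, `lr` and `l2` are assumptions. A real network would need automatic differentiation and usually stronger image priors.

```python
import numpy as np

def activation_maximization(weights_list, target, steps=200, lr=0.1, l2=0.5):
    """Gradient ascent on the input x to maximize the target logit,
    averaged over all models in weights_list, minus an L2 penalty on x."""
    dim = weights_list[0].shape[1]
    x = np.zeros(dim)
    for _ in range(steps):
        # For a linear model, d logit_target / dx is simply the row W[target].
        grad = np.mean([W[target] for W in weights_list], axis=0) - 2.0 * l2 * x
        x = x + lr * grad
    return x

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 5))  # hypothetical classifier: 3 classes, 5 input features

# Baseline: maximize the output neuron of class 1 for the trained model only.
baseline = activation_maximization([W], target=1)

# NoiseGrad-style variant: maximize the same neuron for all noisy models at once.
noisy_models = [W * rng.normal(1.0, 0.2, size=W.shape) for _ in range(20)]
noisegrad_am = activation_maximization(noisy_models, target=1)
```

For this linear toy model the optimum is available in closed form (the averaged weight row divided by `2 * l2`), which makes the sketch easy to check; the interesting behavior described in the article only appears with nonlinear networks.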

“NoiseGrad (NG) enhances global explanations for Activation Maximization, which produces an artificial image that maximally activates a specific neuron of the model, here the output neuron of the class pug (first column) and Persian cat (second column). It visualizes, in a humanly understandable fashion, the concept that the model has learned for the specific class. In the upper row we can observe the Baseline results, obtained by applying Activation Maximization to the trained model. In the bottom row, the results are shown using NoiseGrad for Activation Maximization: It generates an artificial image that maximizes the output neurons of the respective class for all noisy versions of the model at the same time. Comparing the Baseline results with the NoiseGrad results, we can clearly observe that NoiseGrad enhances the abstract visualization of the class object learned by the model.”

Dr. Marina Höhne

The publications in detail:

Kirill Bykov, Anna Hedström, Shinichi Nakajima, Marina M.-C. Höhne: “NoiseGrad – Enhancing Explanations by Introducing Stochasticity to Model Weights”

Anna Hedström, Leander Weber, Dilyara Bareeva, Franz Motzkus, Wojciech Samek, Sebastian Lapuschkin, Marina M.-C. Höhne: “Quantus: An Explainable AI Toolkit for Responsible Evaluation of Neural Network Explanations” & Quantus

Many efforts have been made for revealing the decision-making process of black-box learning machines such as deep neural networks, resulting in useful local and global explanation methods. For local explanation, stochasticity is known to help: a simple method, called SmoothGrad, has improved the visual quality of gradient-based attribution by adding noise in the input space and taking the average over the noise. In this paper, we extend this idea and propose NoiseGrad that enhances both local and global explanation methods. Specifically, NoiseGrad introduces stochasticity in the weight parameter space, such that the decision boundary is perturbed. NoiseGrad is expected to enhance the local explanation, similarly to SmoothGrad, due to the dual relationship between the input perturbation and the decision boundary perturbation. Furthermore, NoiseGrad can be used to enhance global explanations. We evaluate NoiseGrad and its fusion with SmoothGrad – FusionGrad – qualitatively and quantitatively with several evaluation criteria, and show that our novel approach significantly outperforms the baseline methods. Both NoiseGrad and FusionGrad are method-agnostic and as handy as SmoothGrad using simple heuristics for the choice of hyperparameter setting without the need of finetuning.

The evaluation of explanation methods is a research topic that has not yet been explored deeply. However, since explainability is supposed to strengthen trust in artificial intelligence, it is necessary to systematically review and compare explanation methods in order to confirm their correctness.

Until now, no tool has existed that allows researchers to evaluate explanations of neural network predictions quantitatively, exhaustively and quickly. To increase transparency and reproducibility in the field, we therefore built Quantus – a comprehensive, open-source toolkit in Python that includes a growing, well-organized collection of evaluation metrics and tutorials for evaluating explanation methods. The toolkit has been thoroughly tested and is available under an open-source license on PyPI.
