Leila Arras

Doctoral Researcher

Leila Arras is currently a research associate at the Fraunhofer Heinrich Hertz Institute, in the explainable artificial intelligence research group within the department of artificial intelligence, and a member of the BIFOLD graduate school. She previously earned an M.Sc. in computer science with distinction from the Technical University Berlin, with a specialization in machine learning and scalable data science. Her research interests include machine learning, neural networks, interpretability, and their application to natural language processing and computer vision. As a researcher, she enjoys diving deep into the inner workings of neural networks and developing new methods to render artificial intelligence models more transparent. So far, she has worked on broadening the scope of explainable artificial intelligence (XAI) to novel tasks and models, in particular by extending XAI methods to recurrent neural networks for the processing of sequential data. In addition, she has proposed new quantitative evaluation approaches for the objective assessment and comparison of XAI methods. In a recent collaboration, she focused on decomposing probabilistic predictions from a neural network model to identify combinations of potential causes that increase the risk of an outcome.

Research project: “Explaining Artificial Neural Network Predictions: Extension and Evaluation”

- Machine Learning

- Neural Networks

- Explainable Artificial Intelligence

- Natural Language Processing

- Visual Reasoning

Leila Arras, Bruno Puri, Patrick Kahardipraja, Sebastian Lapuschkin, Wojciech Samek

A Close Look at Decomposition-based XAI-Methods for Transformer Language Models

Wojciech Samek, Leila Arras, Ahmed Osman, Grégoire Montavon, Klaus-Robert Müller

Explaining the Decisions of Convolutional and Recurrent Neural Networks. Mathematical Aspects of Deep Learning.

Andreas Rieckmann, Piotr Dworzynski, Leila Arras, Sebastian Lapuschkin, Wojciech Samek, Onyebuchi Aniweta Arah, Naja Hulvej Rod, Claus Thorn Ekstrøm

Causes of Outcome Learning: a causal inference-inspired machine learning approach to disentangling common combinations of potential causes of a health outcome

Leila Arras, Ahmed Osman, Wojciech Samek

CLEVR-XAI: A benchmark dataset for the ground truth evaluation of neural network explanations

Gazing into the Black Box AI

Experts from Fraunhofer HHI and BIFOLD showcased an AI-assisted image evaluation technique at the BMBF Open Day. The demonstration gave an example of how Artificial Intelligence arrives at its decisions and sparked a dialogue on Explainable AI between professionals and attendees. Thanks to everyone who joined us in Berlin.

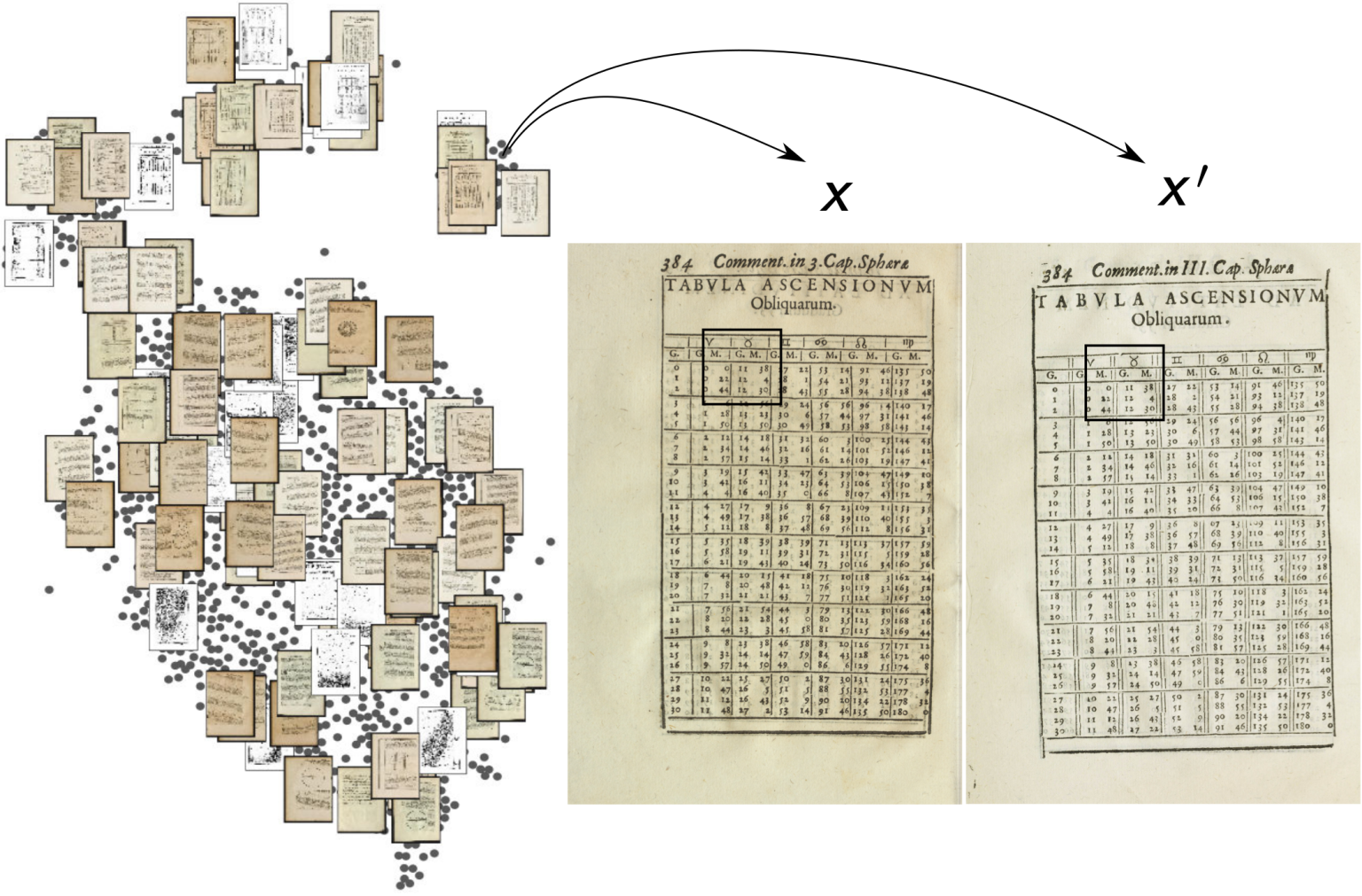

The shared scientific identity of Europe

The project Sphere: Knowledge System Evolution and the Shared Scientific Identity of Europe is one of the leading Digital Humanities projects. It explores a large corpus of more than 350 book editions about geocentric cosmology and astronomy from the early days of printing, between the 15th and the 17th centuries (the Sphaera Corpus), amounting to about 76,000 pages of material. The relatively large size of this humanities dataset presents a challenge to traditional historical approaches, but it also provides a great opportunity to explore the collection computationally. In this regard, the Sphere project is an incubator of multiple Digital Humanities (DH) approaches aimed at answering various questions about the corpus, with the ultimate objective of understanding the evolution and transmission of knowledge in the early modern period.