BIFOLD XAI paper proposes a way to robustify neural networks

Modern deep neural network classifiers are rife with biases and unexpected behaviors. One of these behaviors is the so-called “Clever-Hans effect”, where the model has learned to use task-unrelated features (often spuriously correlated with the true signal in the training data) to arrive at a correct classification decision. For example, a network trained to recognize different types of animals in images may fail to recognize a cow standing on a sandy beach, because it has learned to associate the beach background with animals that typically live in this environment. Such a network may instead predict “seal”, mostly because of the background.

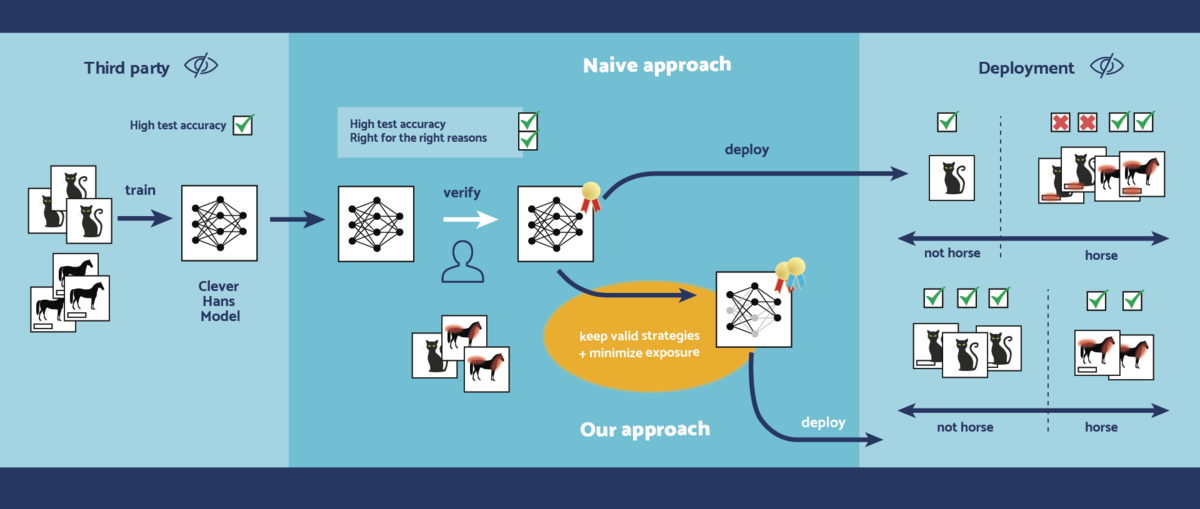

While there exist approaches to de-bias such classifiers, i.e. to “unlearn” unwanted features (such as the background), these approaches typically require knowing which features the network has erroneously integrated into its prediction strategy. In practice, however, we may not be aware of such biases. In real-world scenarios, where pretrained networks are obtained from a third party and adapted to a specific task, the bias may persist in the model. In the example above, the available data may contain no photos taken on a beach at all, so the Clever-Hans effect remains unobserved.

In the paper “Preemptively pruning Clever-Hans strategies in deep neural networks”, the researchers propose a way to robustify neural networks even in this scenario, where the falsely learned features are unknown. Assuming that these features are absent from the data at hand (i.e. the data is “clean”), the idea is simple: keep intact all parts of the network that are needed to correctly classify the clean data, and remove the rest, because they may represent unwanted features. The reasoning is as follows: by assumption, unwanted features do not occur in the clean data, so the parts of the network encoding them are not needed to classify it and can be removed. This makes the network less sensitive to unwanted features.

This approach, named Explanation-Guided Exposure Minimization (EGEM), works as follows. First, the available clean data is passed through the network, and the method records which neurons are active when classification decisions are made. Then, a permanent scaling factor between 0 and 1 is attached to each neuron, depending on the magnitude of its recorded activity. More active neurons get a factor close to 1, leaving them almost unchanged, whereas less active neurons are scaled down toward 0. This is called “soft pruning” the network according to the clean data.
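The exact scaling rule is not spelled out here, so the following is only a minimal NumPy sketch of the soft-pruning idea, not the paper's EGEM (which, as its name says, is guided by explanations). It assumes that each neuron's recorded activity is summarized as its mean squared activation `m` on the clean data, and that `m` is mapped to a factor with the hypothetical rule `m / (m + lam)`. The function names and the strength parameter `lam` are illustrative.

```python
import numpy as np


def soft_pruning_factors(clean_activations: np.ndarray, lam: float = 1.0) -> np.ndarray:
    """Return one scaling factor in [0, 1) per neuron.

    clean_activations has shape (n_samples, n_neurons) and holds the activations
    recorded while passing clean data through the network. The rule
    m / (m + lam) is an illustrative assumption, not the formula from the paper.
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    # Mean squared activation of each neuron over the clean data.
    m = np.mean(clean_activations ** 2, axis=0)
    # Neurons with m much larger than lam get factors near 1; silent neurons get 0.
    return m / (m + lam)


def apply_soft_pruning(activations: np.ndarray, factors: np.ndarray) -> np.ndarray:
    # Permanently rescale each neuron's output; factors broadcast over the batch axis.
    return activations * factors


# Toy usage: three neurons, the third never fires on the clean data.
rng = np.random.default_rng(0)
clean = np.abs(rng.normal(size=(1000, 3)))
clean[:, 2] = 0.0
factors = soft_pruning_factors(clean, lam=0.1)
print(factors)  # the first two factors are about 0.9, the third is 0.0
```

A larger `lam` prunes more aggressively: only neurons whose clean-data activity clearly exceeds `lam` keep a factor near 1.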

In experiments on multiple datasets, the researchers observed that this approach and its variants can indeed mitigate the Clever-Hans effect without requiring additional knowledge of the features to be removed.

Consider, for example, the popular ISIC 2019 skin lesion dataset. The goal is to classify different types of skin lesions, some benign and some malignant, from images. A naively trained network would typically learn to associate colored patches, which are visible on some images in the dataset, with one of the classes. These patches were most likely placed there by the medical staff examining the patient; they are only coincidentally correlated with one of the classes and should not influence any classification. If a newly incoming skin lesion image also contained such a patch, the faulty network would automatically assign it that class without considering the actual skin lesion: clearly the Clever-Hans effect at work.

Given a clean subset of the data, EGEM significantly reduces the likelihood of such errors, increasing accuracy when distracting features are present.
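To make the ISIC scenario concrete, here is a deliberately tiny, hypothetical illustration (not an experiment from the paper). A two-layer ReLU model has a third hidden neuron that responds only to a "colored patch" input and carries a large output weight, i.e. a Clever-Hans shortcut. Soft-pruning factors are computed from patch-free data with the illustrative rule `m / (m + 0.1)`, where `m` is each hidden neuron's mean squared activation. The weights, input encoding and the "logit > 0 means positive class" decision rule are all assumptions made for the sketch.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


# Hypothetical toy network: inputs are (lesion feature A, lesion feature B, patch).
# Hidden neuron 2 copies the patch input, and the output layer gives it a large
# weight, i.e. the model has learned a Clever-Hans shortcut.
W1 = np.eye(3)
w2 = np.array([1.0, -1.0, 5.0])


def logit(x, factors=None):
    h = relu(W1 @ x)
    if factors is not None:
        h = h * factors  # soft pruning of the hidden layer
    return float(w2 @ h)  # a logit > 0 is read as the positive class


# Clean data: lesion features vary, but no image contains a patch.
rng = np.random.default_rng(0)
clean = np.abs(rng.normal(size=(500, 3)))
clean[:, 2] = 0.0
hidden = relu(clean @ W1.T)
m = np.mean(hidden ** 2, axis=0)
factors = m / (m + 0.1)  # illustrative rule; the patch neuron gets factor 0

lesion = np.array([0.2, 1.0, 0.0])
patched = lesion + np.array([0.0, 0.0, 1.0])

print(logit(lesion), logit(patched))  # the patch flips the decision
print(logit(lesion, factors), logit(patched, factors))  # the patch no longer matters
```

The toy is idealized: the spurious feature lives in a single neuron that is exactly silent on clean data, so it is pruned away completely, whereas in a real network such features are usually spread across many neurons. Note also that pruning slightly shrinks the clean logit (from about -0.8 to about -0.72), a small instance of the collateral damage to useful neurons that the researchers aim to reduce.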

In the future, the BIFOLD researchers plan to improve the approach further by removing falsely learned features more precisely, i.e. avoiding collateral damage to the “useful” neurons, and to apply the general framework to a wider range of applications.

Paper: Preemptively pruning Clever-Hans strategies in deep neural networks

Authors: Lorenz Linhardt, Klaus-Robert Müller, Grégoire Montavon

DOI: https://doi.org/10.1016/j.inffus.2023.102094 (Information Fusion Journal)